In today’s digital world, cloud computing has transformed how businesses operate, offering scalability, flexibility, and cost efficiency on demand. Amazon Web Services (AWS), the largest cloud platform by market share, sits at the heart of this shift, powering everything from startups to global enterprises.

To get the most out of AWS, you need to choose the right programming language. The language you use affects performance, scalability, and how smoothly your applications integrate with AWS services. This guide explores the best programming languages for AWS cloud development and helps you decide which one fits your needs.

1. 10 Best AWS programming languages for cloud computing

When building on AWS, the language you choose can make a big difference in performance, scalability, and ease of development. Let’s look at some of the best programming languages for cloud computing and how they’re used.

1.1. Python

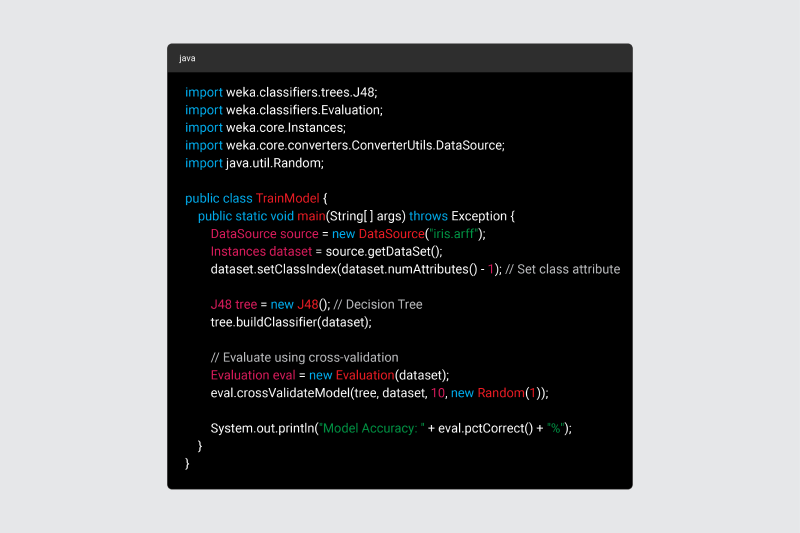

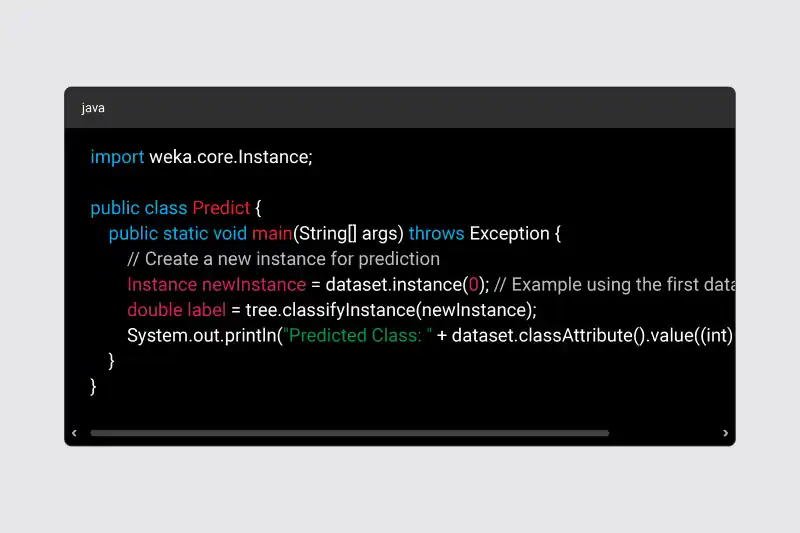

Python is one of the most popular AWS programming languages thanks to its simplicity, versatility, and extensive library support. It’s widely used for machine learning (ML), data analysis, automation, and serverless computing. ML frameworks like TensorFlow and PyTorch make it a go-to for AI-driven applications, while AWS Chalice, a Python microframework for serverless apps, simplifies building on AWS Lambda. Its large community also means help and tutorials are easy to find. Through the AWS SDK for Python (Boto3), Python works smoothly with services like AWS Lambda, Amazon S3, and Amazon EC2, making it an excellent choice for cloud development.
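
As a concrete illustration, here is a minimal sketch of a Python Lambda function. It assumes Lambda’s Python runtime and an Amazon API Gateway proxy integration, where query string parameters arrive under `queryStringParameters` (which can be `None` when the request has none). The greeting logic is purely illustrative; `lambda_handler` matches the Lambda console’s default handler name, but the handler name is configurable.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting for an API Gateway proxy event.

    Assumes the proxy-integration event shape: query parameters live under
    "queryStringParameters", which may be missing or None.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # A proxy integration expects statusCode, headers, and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-built event; no AWS account needed.
    fake_event = {"queryStringParameters": {"name": "AWS"}}
    print(lambda_handler(fake_event, None))
```

Because the handler is a plain function, you can test it locally with a hand-built event before deploying, as the `__main__` block does.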

1.2. Java

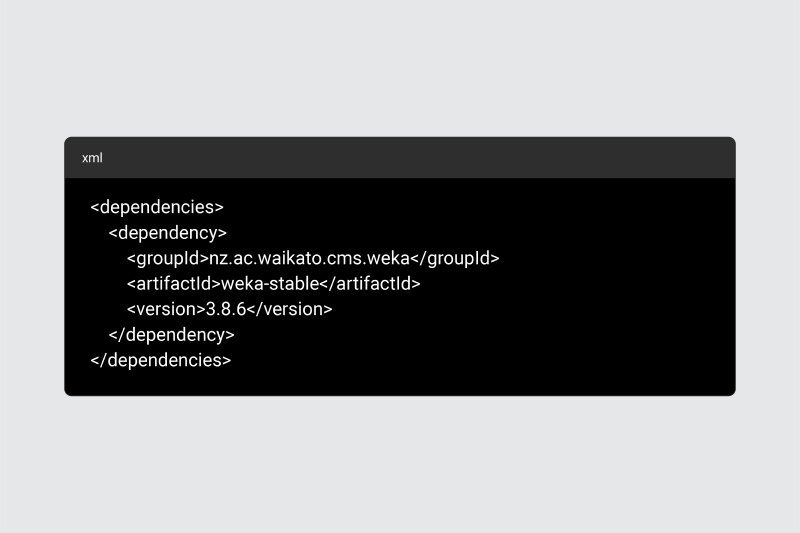

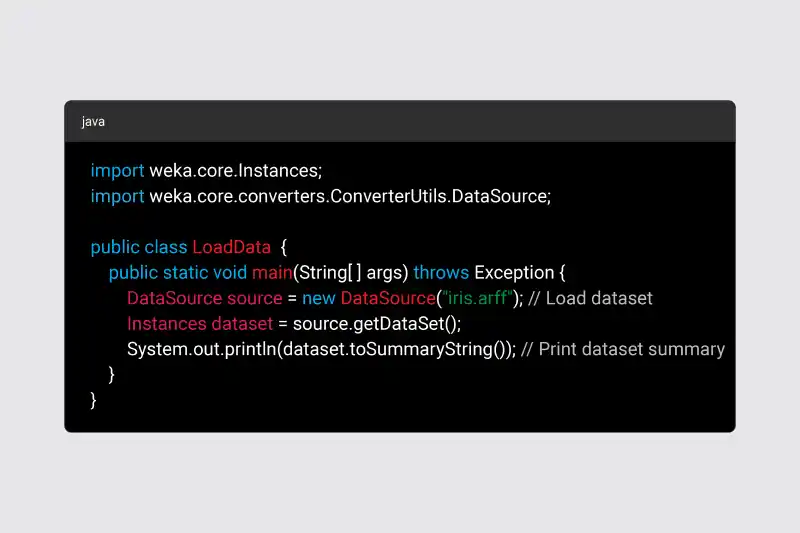

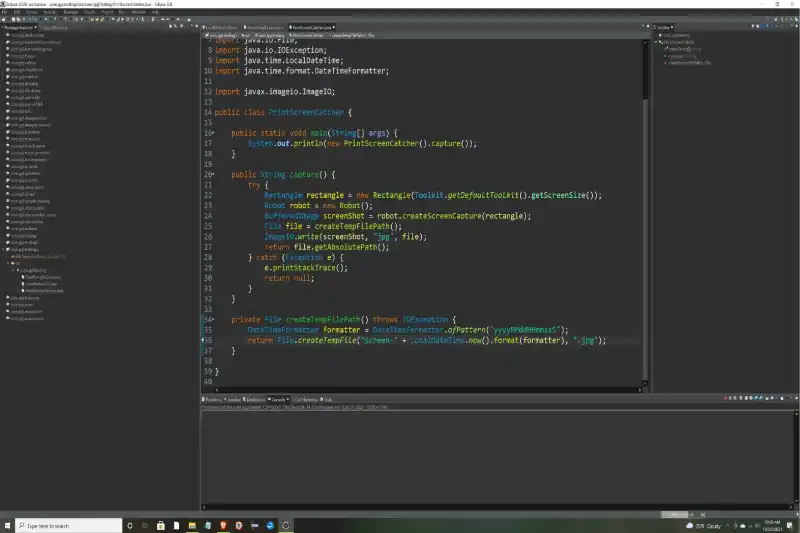

Java is a powerhouse for enterprise-level cloud applications, known for its reliability, performance, and security. It’s widely used in large-scale AWS applications, especially for scalable, high-performance backend systems. With its mature ecosystem, strong multi-threading support, and the AWS SDK for Java, it integrates well with Amazon EC2, AWS Elastic Beanstalk, and AWS Lambda, making it ideal for businesses that need stability and scalability.

1.3. JavaScript

JavaScript, particularly with Node.js, is a favorite for cloud-based applications, APIs, and serverless computing. Its event-driven, non-blocking architecture makes it well suited to real-time applications and microservices. Developers often use it with AWS Lambda, Amazon API Gateway, and Amazon DynamoDB, making it a strong choice for web-based cloud applications and backend services.

1.4. Go

Go (or Golang) is gaining traction in cloud-native development thanks to its speed, efficiency, and built-in concurrency through goroutines and channels. It’s particularly popular in the DevOps community, where tools such as Docker, Kubernetes, and Terraform are written in it, and it’s used to build high-performance cloud applications. AWS developers often use Go for containerized applications on Amazon ECS and Amazon EKS and for serverless functions on AWS Lambda.

1.5. Ruby

Ruby is known for its simplicity and flexibility, making it a great choice for cloud-based web applications. Many developers use Ruby on Rails to build scalable apps that run on AWS, and it’s commonly paired with AWS Elastic Beanstalk, which simplifies deployment and management.

1.6. Node.js

Node.js is a top choice for cloud development on AWS, especially for serverless applications, APIs, and real-time services. Its asynchronous, event-driven architecture lets it handle many concurrent requests efficiently without blocking on I/O, which makes it ideal for microservices and backend development. Developers frequently use Node.js with AWS Lambda, Amazon API Gateway, Amazon DynamoDB, and AWS Fargate to build scalable, cost-effective cloud solutions. Its lightweight runtime and the large ecosystem of npm packages make it a powerful tool for modern cloud applications.
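
Node.js gets this efficiency from a single-threaded event loop that keeps working while I/O is pending. To keep all examples in one language, the sketch below shows the same non-blocking idea with Python’s `asyncio`; it is an analogue of the Node.js model, not Node.js itself. `fake_request` is a hypothetical stand-in for a database or HTTP call.

```python
import asyncio
import time


async def fake_request(request_id: int, delay: float = 0.1) -> str:
    """Hypothetical stand-in for an I/O-bound call such as a database query.

    asyncio.sleep yields control to the event loop instead of blocking the
    thread, so other requests can make progress while this one waits.
    """
    await asyncio.sleep(delay)
    return f"response-{request_id}"


async def handle_all(n: int) -> list[str]:
    """Start n requests concurrently; gather keeps results in input order."""
    return await asyncio.gather(*(fake_request(i) for i in range(n)))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(handle_all(10))
    elapsed = time.perf_counter() - start
    # The ten 0.1 s waits overlap, so this takes roughly 0.1 s rather than 1 s.
    print(f"{len(results)} responses in {elapsed:.2f}s")
```

Run one after another, the same ten calls would take about a second; the event loop overlaps the waits on a single thread, which is the property that makes this style efficient for I/O-heavy services.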

1.7. .NET

For enterprises already invested in Microsoft technologies, .NET is a natural fit for AWS development. It provides a robust framework for building secure, enterprise-grade applications. Developers can use the AWS SDK for .NET together with services like Amazon EC2 and AWS Lambda to build scalable cloud solutions.

1.8. Rust

Rust is gaining attention for its speed, memory safety, and security features, making it an excellent choice for performance-critical cloud applications. While not as widely adopted on AWS as other languages, it’s becoming more common for high-performance microservices and systems programming in the cloud.

1.9. Swift

While primarily known as Apple’s programming language, Swift is moving into cloud development through server-side frameworks like Vapor. Developers building iOS or macOS applications can write their backend services in the same language and run them on AWS, making Swift a growing option for full-stack development.

1.10. Kotlin

Kotlin, a modern language for Android and JVM-based development, is becoming a strong player in cloud computing. Because it runs on the JVM, it can use existing Java libraries and tooling, and it’s increasingly used for serverless functions, microservices, and backend development on AWS, particularly with AWS Lambda and Amazon API Gateway.

Each of these languages brings unique strengths to AWS cloud development. The best choice depends on what your project needs most, whether that’s scalability, performance, ease of use, or compatibility with AWS services.

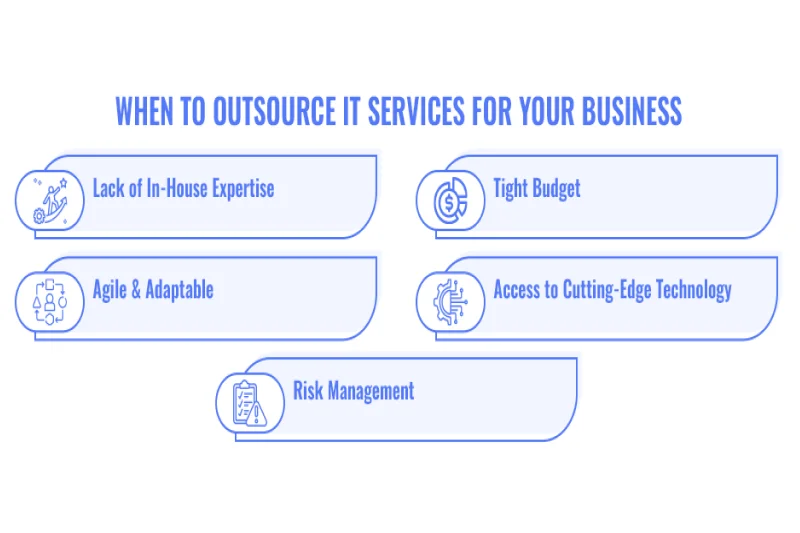

2. Choosing the best AWS language for your cloud application

Choosing the right AWS programming language is crucial to building a scalable, efficient cloud application. With so many options available, weigh factors like project requirements, AWS service compatibility, and developer expertise before deciding.

2.1. Key factors to consider

Picking the right AWS programming language depends on several factors. Here’s what you should keep in mind:

- Project Requirements & Scope – What are you building? A data-heavy machine learning app? A real-time web service? Your project’s complexity will guide your language choice.

- AWS Services You’re Using – Some languages fit specific AWS services better than others. For example, Python is a go-to for AWS Lambda and Amazon SageMaker, while Java is a common choice for enterprise workloads on Amazon EC2 and AWS Elastic Beanstalk.

- Scalability & Performance Needs – If your application needs to handle high traffic and fast execution, languages like Go or Java can be solid choices.

- Developer Experience & Team Expertise – Choose a language your team is comfortable with. If your developers are JavaScript experts, Node.js might be the easiest transition for AWS development.

- Community Support & Resources – A strong community means better support, more tutorials, and easier troubleshooting. Popular languages like Python and Java have extensive AWS documentation and libraries.

- Security Considerations – Some languages offer built-in security features that might be crucial for your application. Rust, for instance, is known for its memory safety and reliability.
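
One way to weigh these factors against each other is a simple weighted decision matrix. The sketch below covers a subset of the factors for a hypothetical team; the weights and the 1-5 scores are made-up inputs for illustration, not benchmarks or recommendations.

```python
# Hypothetical weights for a subset of the factors above (they sum to 1.0).
# They describe one imaginary project, not a general recommendation.
WEIGHTS = {
    "service_fit": 0.3,     # AWS services you're using
    "performance": 0.2,     # scalability & performance needs
    "team_expertise": 0.3,  # developer experience & team expertise
    "community": 0.2,       # community support & resources
}

# Hypothetical 1-5 scores an imaginary team might assign; NOT measured data.
CANDIDATES = {
    "Python": {"service_fit": 5, "performance": 3, "team_expertise": 4, "community": 5},
    "Go": {"service_fit": 4, "performance": 5, "team_expertise": 2, "community": 4},
    "Node.js": {"service_fit": 5, "performance": 4, "team_expertise": 3, "community": 5},
}


def weighted_score(scores: dict[str, int], weights: dict[str, float]) -> float:
    """Return the weighted sum of one candidate's factor scores."""
    return sum(weight * scores[factor] for factor, weight in weights.items())


def rank(candidates: dict[str, dict[str, int]], weights: dict[str, float]) -> list[str]:
    """Return candidate names sorted from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name], weights),
        reverse=True,
    )


if __name__ == "__main__":
    for name in rank(CANDIDATES, WEIGHTS):
        print(f"{name}: {weighted_score(CANDIDATES[name], WEIGHTS):.2f}")
```

With these made-up inputs Python ranks first (4.30), ahead of Node.js (4.20) and Go (3.60). A team of JavaScript experts would likely score `team_expertise` differently and could end up with Node.js on top; the value of the exercise is that it makes the trade-offs explicit.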

2.2. Matching AWS services with appropriate languages

AWS supports multiple programming languages, but some are a better fit for specific services:

- Python – Ideal for AWS Lambda, machine learning (SageMaker), and data analysis. Its vast ecosystem of libraries makes it a top choice for AI, automation, and cloud scripting.

- Java – A strong contender for enterprise-level applications and backend services on AWS. Works well with Amazon EC2, Elastic Beanstalk, and large-scale microservices.

- Node.js – Perfect for serverless computing and web applications. Commonly used with AWS Lambda, Amazon API Gateway, and DynamoDB to build scalable cloud solutions.

- Go – Best suited for cloud-native applications and microservices. It excels in AWS Lambda, Amazon ECS, and Kubernetes-based environments (EKS) where speed and concurrency matter.
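
When targeting AWS Lambda, each language maps to a runtime identifier that you specify when creating the function. The mapping below uses example identifiers we believe were available as of 2024; AWS adds newer versions and retires older ones over time, so check the Lambda runtimes documentation before relying on them. Note that Go no longer has a dedicated managed runtime: Go functions are compiled to an executable named `bootstrap` and deployed on an OS-only runtime such as `provided.al2023`. The `runtime_for` helper is hypothetical.

```python
# Example AWS Lambda runtime identifiers (assumed current as of 2024).
# Newer versions may exist and older ones are retired over time;
# confirm against the Lambda runtimes documentation.
LAMBDA_RUNTIMES = {
    "python": "python3.12",
    "java": "java21",
    "nodejs": "nodejs20.x",
    # Go has no dedicated managed runtime: the compiled binary is named
    # "bootstrap" and runs on an OS-only runtime.
    "go": "provided.al2023",
}


def runtime_for(language: str) -> str:
    """Return the example runtime identifier for a language name.

    Normalizes case, surrounding whitespace, and dots ("Node.js" -> "nodejs").
    """
    key = language.strip().lower().replace(".", "")
    if key not in LAMBDA_RUNTIMES:
        raise ValueError(f"no runtime mapping for {language!r}")
    return LAMBDA_RUNTIMES[key]


if __name__ == "__main__":
    for lang in ("Python", "Java", "Node.js", "Go"):
        print(f"{lang}: {runtime_for(lang)}")
```

The identifier is the value you would pass as the `Runtime` parameter when creating a function, for example through the Lambda `CreateFunction` API.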

By understanding your project’s needs and the strengths of each language, you can make an informed decision that ensures efficiency, scalability, and long-term success in your AWS cloud journey.

3. How might AI and new technology influence AWS language support?

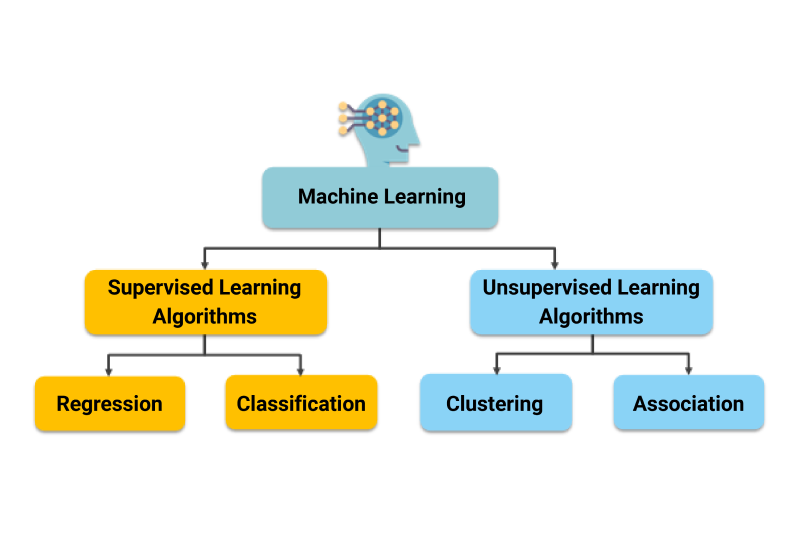

The rise of artificial intelligence and machine learning is also shaping the future of AWS programming. Languages with strong AI capabilities, like Python and Java, are well positioned on AWS; Python in particular benefits from robust ML frameworks such as TensorFlow and PyTorch. As AI becomes more deeply integrated into cloud applications, AWS may introduce enhanced tools and optimizations for AI-focused languages.

Additionally, AWS will likely expand its language support to accommodate new frameworks and technologies. We may see greater emphasis on specialized languages for AI, automation, and high-performance computing, ensuring developers have the right tools to build the next generation of cloud applications.

4. Conclusion

The right AWS programming language is essential for building efficient, scalable, and future-proof cloud applications. Whether you need Python for machine learning, Java for enterprise solutions, or Node.js for serverless applications, the best choice depends on your project’s goals and AWS service compatibility.
